This paper is available on arXiv under CC 4.0 license.

Authors:

(1) Samie Mostafavi, ssmos, KTH Royal Institute of Technology;

(2) Vishnu Narayanan Moothedath, vnmo, KTH Royal Institute of Technology;

(3) Stefan Ronngren, steron, KTH Royal Institute of Technology;

(4) Neelabhro Roy, nroy, KTH Royal Institute of Technology;

(5) Gourav Prateek Sharma, gpsharma, KTH Royal Institute of Technology;

(6) Sangwon Seo, sangwona, KTH Royal Institute of Technology;

(7) Manuel Olguín Munoz, KTH Royal Institute of Technology;

(8) James Gross, jamesgr, KTH Royal Institute of Technology.

Table of Links

- Abstract & Introduction

- Testbed Design and Architecture

- Experimental Testbed Validation

- Supported Experimentation

- Acknowledgements & References

II. TESTBED DESIGN AND ARCHITECTURE

The ExPECA testbed is designed to address two primary challenges pervasive in edge computing research: 1) end-to-end experimentation and 2) repeatability and reproducibility. To enable end-to-end edge computing experiments that encompass both communication and computation aspects, ExPECA is engineered with the following primary goals:

1) Provisioning communication components by utilizing a diverse array of wireless and wired links.

2) Executing and overseeing computational elements within containerized environments running on the testbed infrastructure.

These objectives are realized through an architectural framework depicted in Figure 1. Central to this architecture is a versatile network fabric that ensures IP connectivity among different experimental components, which may include servers located in the cloud or at the edge, as well as wireless terminals.

Several design choices made to realize these objectives distinguish ExPECA from contemporary solutions. We describe these choices and their impact below.

A. Location and Physical Environment

The testbed is located in KTH R1 hall, an experimental facility 25 meters below ground. This isolated location offers a controlled environment with minimal radio interference, making it ideal for running reproducible wireless experiments. With dimensions of 12 meters in width, 24 meters in length, and 12 meters in height, the facility is spacious enough to accommodate a variety of remotely controlled devices, such as drones and cars, providing researchers with considerable freedom in designing their experiments.

B. Control and Management Software

We adopted CHI-in-a-Box as the baseline testbed implementation, with extensions and contributions from our team. CHI-in-a-Box is a packaging of the CHameleon Infrastructure (CHI) software framework, which is built primarily on top of the mainstream open-source OpenStack platform. CHI has mature support for edge-to-cloud provisioning of compute, storage, and network fabrics, and provides end-to-end experiment control and user services. Specifically, we leverage the CHI@Edge flavor of the framework, a novel version that supports running containerized workloads on compute resources. In the following, we describe the essential elements of CHI.

Users can allocate resources either on-the-fly or through advance reservations, facilitated by customized versions of OpenStack’s Blazar and Doni services. The ExPECA team has tailored these services to include features such as radio and Kubernetes node reservations. The allocatable resources are worker nodes, radios, networks, and IP addresses.

Once resources are secured, the integrated Kubernetes (K8S) orchestration system enables the deployment of containerized workloads via OpenStack Zun. Users can either use preconfigured Docker images supplied by the ExPECA team (as in the case of SDR RAN) or upload their own container images. Notably, the ExPECA team has extensively modified Zun to support attaching network interfaces and block storage to K8S containers, features not originally supported in CHI@Edge.

Configuration of complex experiment topologies, such as distributed networking experiments, is supported through CHI’s programmable interface and our adopted flavor of the python-chi Python library.

In addition, OpenStack Neutron manages the network architecture. Neutron provides a robust set of networking capabilities, including virtual networking, which allows for the creation of isolated networks, subnets, and routers. We connect all testbed components, including radios, to managed Layer 2 switches controlled by Neutron.
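To make the distinction between on-the-fly allocation and advance reservation concrete, the following is a minimal Python sketch of how reservations over the listed resource types could be represented and checked for conflicts. It does not use the Blazar, Doni, or python-chi APIs: the `Reservation` class, the `conflicts` and `on_the_fly` helpers, and the resource names are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Resource kinds the text lists as allocatable on the testbed.
RESOURCE_KINDS = {"worker", "radio", "network", "ip"}


@dataclass(frozen=True)
class Reservation:
    """Hypothetical record of one reserved resource (not a Blazar object)."""
    kind: str        # one of RESOURCE_KINDS
    name: str        # illustrative resource name, e.g. "sdr-01"
    start: datetime
    end: datetime

    def __post_init__(self):
        # Validation only; no fields are modified on the frozen instance.
        if self.kind not in RESOURCE_KINDS:
            raise ValueError(f"unknown resource kind: {self.kind}")
        if self.end <= self.start:
            raise ValueError("reservation must end after it starts")


def conflicts(a: Reservation, b: Reservation) -> bool:
    """Two reservations conflict if they target the same resource and
    their half-open time windows [start, end) overlap."""
    same_resource = (a.kind, a.name) == (b.kind, b.name)
    return same_resource and a.start < b.end and b.start < a.end


def on_the_fly(kind: str, name: str, now: datetime, hours: float) -> Reservation:
    """Model an on-the-fly allocation as a reservation starting immediately."""
    return Reservation(kind, name, now, now + timedelta(hours=hours))
```

In this sketch, an on-the-fly request for a radio is rejected only if `conflicts` returns `True` against an existing advance reservation for the same radio; back-to-back windows on the same resource do not conflict because the intervals are half-open.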

C. Radio Nodes

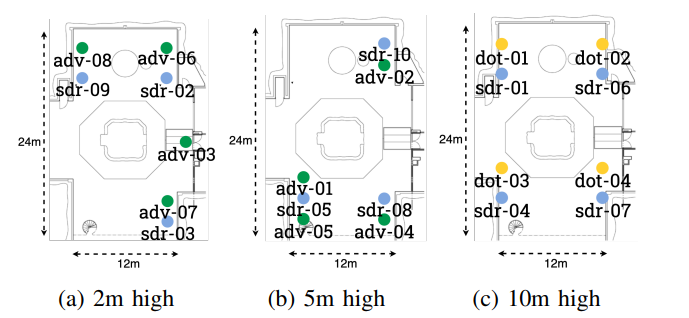

One of the standout features of ExPECA is the inclusion of wireless terminals as reservable resources. These radios are mounted at diverse locations in R1, at three different levels, as shown in Figure 3. They are categorized into two types:

1) SDR Nodes: These can serve as the air interface for any wireless protocol implemented for USRP E320 SDRs, including OpenAirInterface (OAI) 5G, OAI LTE, SRS LTE, Mangocomm IEEE 802.11b/g, or any GNU Radio-based wireless protocol.

2) COTS Radios: These include an Ericsson private 5G system with radio dots, and Advantech routers serving as 4G/5G COTS UE nodes.

We chose all radios, specifically the SDRs, to be equipped with IP-networked interfaces. This allowed us to enroll them as network segments in OpenStack Blazar and Doni with minimal extension effort. Users can easily integrate them into any workload container or SDR host container through the testbed software. For example, researchers can seamlessly transition from a high-quality 5G link between locations sdr-01 and sdr-06 to a poor-quality channel between sdr-09 and sdr-07, enabling them to empirically assess the impact on application performance. Alternatively, they can switch to a WiFi link between sdr-01 and sdr-06 for further experimentation.
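The link-switching example above can be sketched as a sweep over link scenarios. This is a minimal illustration in Python, not the testbed's interface: `LinkScenario`, `run_sweep`, and the `measure` callback are hypothetical names, and attaching radios to containers is assumed to be handled separately by the testbed software.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Iterable


@dataclass(frozen=True)
class LinkScenario:
    """Hypothetical description of one wireless link under test."""
    technology: str  # e.g. "5G" or "WiFi"
    node_a: str      # radio location, named as in the text
    node_b: str

    @property
    def label(self) -> str:
        return f"{self.technology}:{self.node_a}<->{self.node_b}"


# The three links mentioned in the text.
SCENARIOS = [
    LinkScenario("5G", "sdr-01", "sdr-06"),    # high-quality link
    LinkScenario("5G", "sdr-09", "sdr-07"),    # poor-quality channel
    LinkScenario("WiFi", "sdr-01", "sdr-06"),  # alternative technology
]


def run_sweep(
    scenarios: Iterable[LinkScenario],
    measure: Callable[[LinkScenario], float],
) -> Dict[str, float]:
    """Run the same application measurement once per link scenario.

    `measure` is a placeholder supplied by the experimenter; it would
    deploy the workload over the given link and return a single metric.
    """
    return {s.label: measure(s) for s in scenarios}
```

Keeping the application measurement fixed and varying only the scenario is what lets differences in the resulting metric be attributed to the link rather than to the workload.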

D. Compute Nodes

ExPECA offers compute resources by allowing users to run containerized applications with the most recent version of OpenStack Zun, which uses K8S (Kubernetes) as the orchestration layer. K8S is designed to handle a large number of containers across a variety of environments, making it well-suited for complex, large-scale deployments. It also offers a rich set of APIs and a pluggable architecture, allowing for greater customization and extensibility. This was particularly useful for our team when developing new features in Zun: 1) network attachment, 2) block storage attachment, and 3) resource management, i.e., specifying the number of cores and the memory size for the container.

Users can run their containerized applications on ExPECA’s Intel x86 servers enrolled in the K8S cluster, which we refer to as 'workers'. Workers are used not only to deploy and orchestrate application workloads, but also to deploy SDR communication stacks or apply radio node configurations. With the advent of Open Radio Access Network (O-RAN) and SDRs, all components of the wireless network stack can run on general-purpose processors. They can thus be containerized and distributed across multiple hosts, as long as the containers are connected to the SDRs. We provide reference container images for SDR 5G, SDR LTE, and WiFi provisioning.

Lastly, all worker nodes achieve sub-µs synchronization by exchanging PTP messages with a Grandmaster (GM) clock that itself uses GPS as a reference. Tight time synchronization is essential for users who require the same timing reference to timestamp packets across various locations within the testbed.
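As background on how a worker can estimate its clock offset from PTP messages, the following minimal Python sketch applies the standard IEEE 1588 delay request-response calculation. The PTP profile and implementation used in ExPECA are not described here, so this is only an illustration; it assumes a symmetric path between worker and Grandmaster, and the function name and timestamp values are hypothetical.

```python
from typing import Tuple


def ptp_offset_and_delay(
    t1_ns: int, t2_ns: int, t3_ns: int, t4_ns: int
) -> Tuple[float, float]:
    """Estimate clock offset and mean path delay from four PTP timestamps.

    t1: Grandmaster sends Sync            (Grandmaster clock)
    t2: worker receives Sync              (worker clock)
    t3: worker sends Delay_Req            (worker clock)
    t4: Grandmaster receives Delay_Req    (Grandmaster clock)

    Assumes the forward and reverse path delays are equal.
    Returns (offset_ns, delay_ns), where a positive offset means the
    worker clock is ahead of the Grandmaster.
    """
    forward = t2_ns - t1_ns   # path delay + offset
    reverse = t4_ns - t3_ns   # path delay - offset
    offset_ns = (forward - reverse) / 2
    delay_ns = (forward + reverse) / 2
    return offset_ns, delay_ns


# Illustrative values: worker clock 300 ns ahead, 500 ns one-way delay.
offset, delay = ptp_offset_and_delay(1000, 1800, 2800, 3000)
```

With these illustrative timestamps, the forward difference is 800 ns and the reverse difference is 200 ns, giving an estimated offset of 300 ns and a mean path delay of 500 ns. Any asymmetry between the two directions appears directly as an error in the offset estimate, which is one reason a well-controlled network is needed for sub-µs accuracy.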